[CS229] 01 and 02: Introduction, Regression Analysis and Gradient Descent

Tag: python, machine learning

2018-10-21


- definition: "A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E." (Tom Mitchell, 1998)

- supervised learning: the “right answers” (labels) are given for each training example

- regression: predict a continuous-valued output (e.g., house price)

- classification: predict a discrete-valued output (e.g., cancer type)

- unsupervised learning:

- unlabelled data; clustering methods are used to find structure in it

- examples: Google News, gene expression analysis, organising computer clusters, social network analysis, astronomical data analysis

- cocktail party problem: several voices overlap in the same recording; the task is to separate them into individual sources

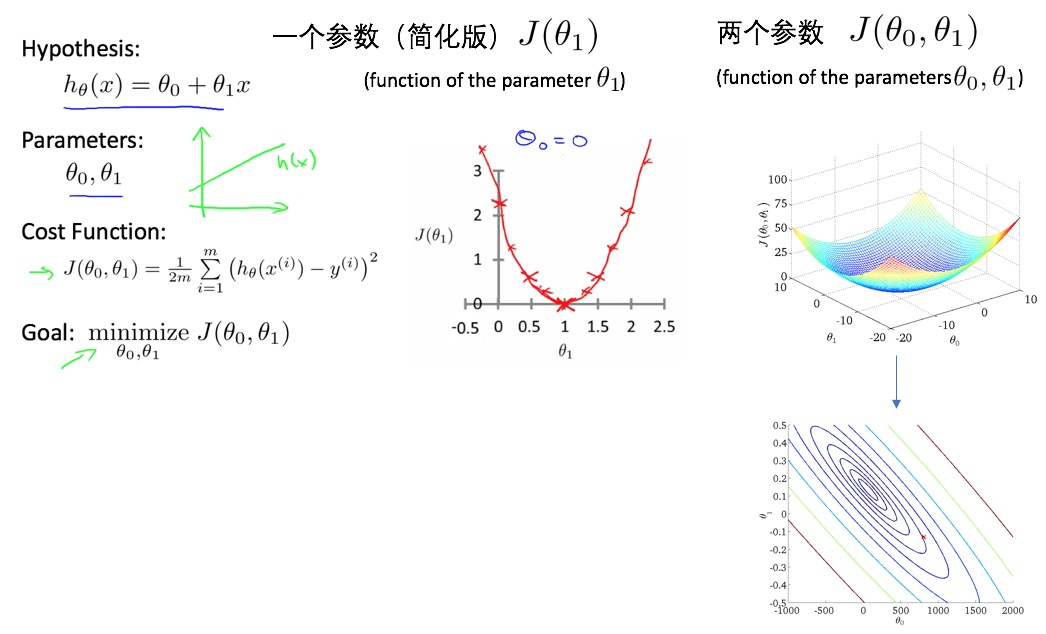
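To make "finding structure in unlabelled data" concrete, here is a minimal sketch of one clustering method. The notes do not name a specific algorithm, so k-means is used purely as a familiar example; the function name `kmeans_1d`, the toy points, and the starting centers are all made up for illustration.

```python
def kmeans_1d(points, centers, iterations=10):
    """Tiny 1-D k-means: alternate between assigning points and moving centers."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its points
        # (an empty cluster keeps its previous center).
        centers = [
            sum(c) / len(c) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters


# Unlabelled toy data with two visible groups (hypothetical values).
points = [1.0, 1.2, 0.8, 5.0, 5.3, 4.7]
centers, clusters = kmeans_1d(points, centers=[0.0, 6.0])
print(centers)   # one center near each group
print(clusters)  # the points split into the two groups
```

No labels are passed in anywhere: the grouping comes only from the distances between points, which is the difference from the supervised setting.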

- linear regression with one variable (univariate):

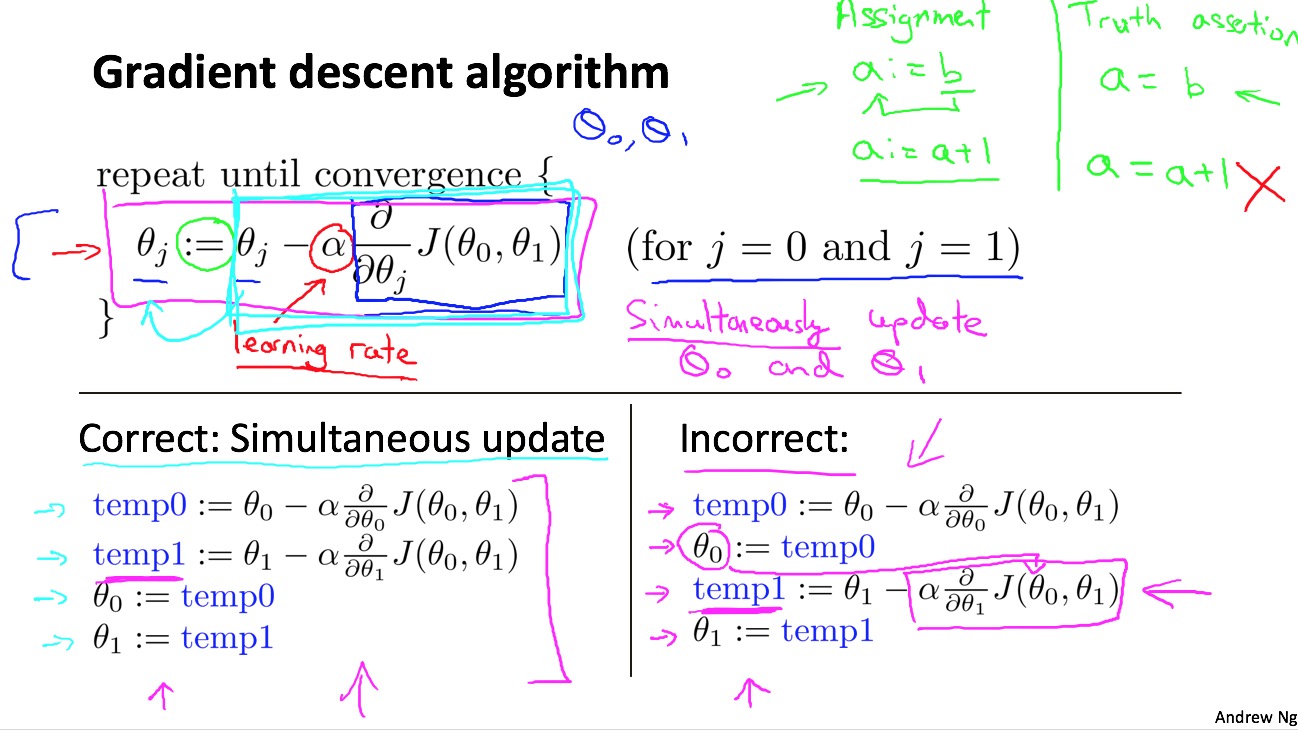
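A minimal sketch of the univariate model, assuming the usual course notation: hypothesis h(x) = theta0 + theta1 * x and squared-error cost J(theta0, theta1) = 1/(2m) * sum((h(x_i) - y_i)^2). The function names and the toy data are assumptions for illustration, not taken from the lecture.

```python
def predict(theta0, theta1, x):
    """Hypothesis h(x) = theta0 + theta1 * x for a single input x."""
    return theta0 + theta1 * x


def cost(theta0, theta1, xs, ys):
    """Squared-error cost J = 1/(2m) * sum((h(x_i) - y_i)^2)."""
    m = len(xs)
    squared_errors = (
        (predict(theta0, theta1, x) - y) ** 2 for x, y in zip(xs, ys)
    )
    return sum(squared_errors) / (2 * m)


# Toy data lying exactly on y = 1 + 2x (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

print(cost(1.0, 2.0, xs, ys))  # parameters that fit the data exactly
print(cost(0.0, 0.0, xs, ys))  # a poor fit gives a larger cost
```

The cost is zero only when the line passes through every point, so learning the model amounts to finding the parameters that minimise J.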

- parameter estimation: gradient descent algorithm
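A rough sketch of batch gradient descent for the univariate case, assuming the squared-error cost J = 1/(2m) * sum((h(x_i) - y_i)^2) with h(x) = theta0 + theta1 * x. The learning rate `alpha`, the iteration count, and the toy data are arbitrary choices for this example and would need tuning on real data.

```python
def gradient_descent(xs, ys, alpha=0.1, iterations=2000):
    """Fit h(x) = theta0 + theta1 * x by batch gradient descent."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0
    for _ in range(iterations):
        # Prediction error on every training example with the current parameters.
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to theta0 and theta1.
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Simultaneous update: both gradients use the old parameter values.
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1


# Toy data lying exactly on y = 1 + 2x (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

theta0, theta1 = gradient_descent(xs, ys)
print(theta0, theta1)  # should approach 1.0 and 2.0
```

Both gradients are computed before either parameter changes, which is the simultaneous update the algorithm requires. If `alpha` is too large the updates overshoot and the cost grows instead of shrinking; if it is too small, convergence is slow.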

If you link to this blog, please cite this page. Thanks!

Post link: https://tsinghua-gongjing.github.io/posts/CS229-01-02.html
